Slides

Hi, I'm Myles Braithwaite, and tonight I'm going to be talking about pgloader, a Postgres utility for loading data from files or migrating a whole database to Postgres.

Just so you know, I'm not a DBA.

I'm a programmer and a data scientist.

I work for a company called GrantMatch. We make it easier for companies to manage their funding strategy, using a large dataset of funding programs and historical grant approvals to match our users with the best available grants for their business.

The application was being hosted on Digital Ocean, managed by ServerPilot, and deployed with DeployBot. I wanted to migrate to Heroku for simpler management of the application (as I'm the only technical person at the company).

Because Heroku has amazing Postgres support, I wanted to migrate the MySQL database to Postgres so it would fit better into their ecosystem.

So the original title of this talk was going to be "How migrating a MySQL database to Postgres made me really sad."

I spent a year researching ways to migrate the MySQL database to Postgres, but all the projects I found seemed outdated, and I finally gave up.

But then I found pgloader, and all it took was a couple of commands to migrate the database.

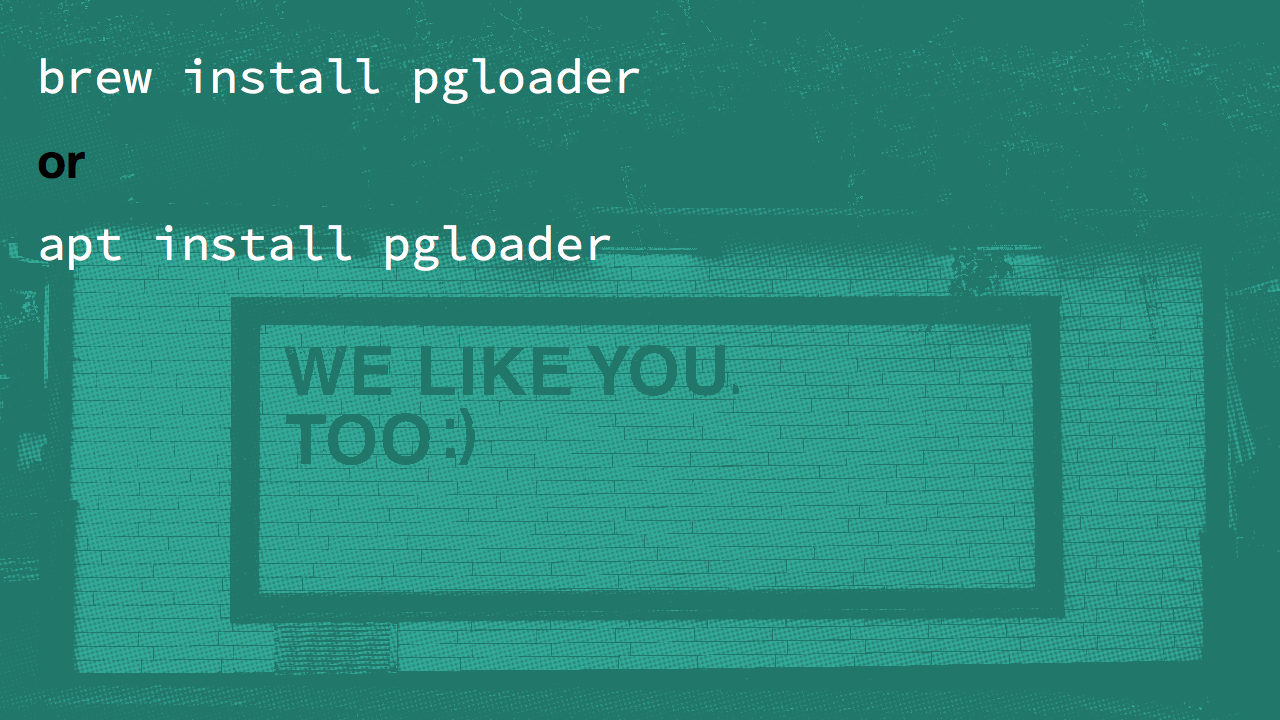

First, installation: pgloader is available in most major package repositories.

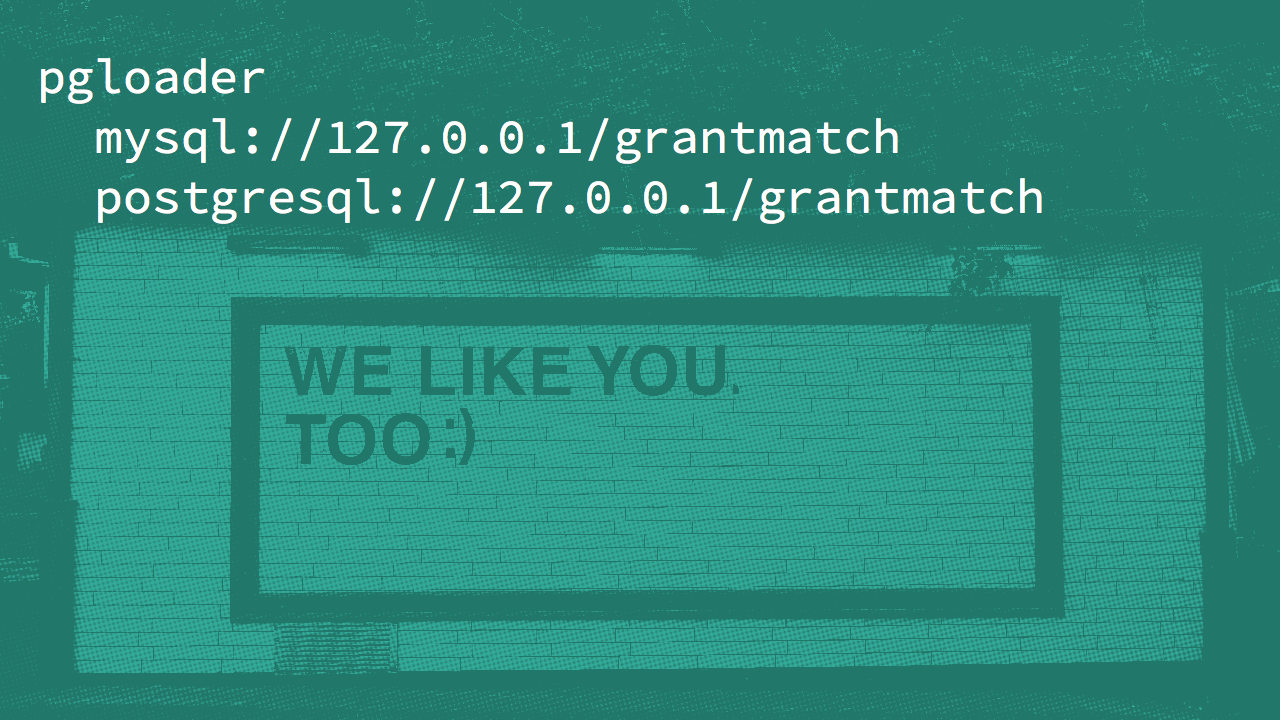

Then you just say where the data you want to load is, and which Postgres database you want to load it into.
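As a rough sketch of that step, here is how the same one-shot migration could be scripted from Python. It assumes pgloader is installed and on your `PATH`, and it uses the `pgloader SOURCE TARGET` form: source connection string first, Postgres target second. The helper names and the connection strings are hypothetical placeholders, not the exact commands from my migration.

```python
from __future__ import annotations

import shlex
import subprocess


def pgloader_command(source_uri: str, target_uri: str) -> list[str]:
    """Build the argument list for a one-shot pgloader migration.

    pgloader takes the source connection string first and the
    Postgres target second.
    """
    return ["pgloader", source_uri, target_uri]


def run_migration(source_uri: str, target_uri: str) -> None:
    """Run pgloader; raises CalledProcessError if it exits non-zero."""
    # check=True turns a failed migration into a Python exception
    subprocess.run(pgloader_command(source_uri, target_uri), check=True)


if __name__ == "__main__":
    # Hypothetical connection strings: replace with your own hosts and names.
    cmd = pgloader_command(
        "mysql://app_user@localhost/app_db",
        "postgresql://localhost/app_db",
    )
    # Print the shell-ready command instead of running it.
    print(shlex.join(cmd))
```

Building the argument list separately from running it makes it easy to print, log, or review the exact command before pointing it at a production database.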

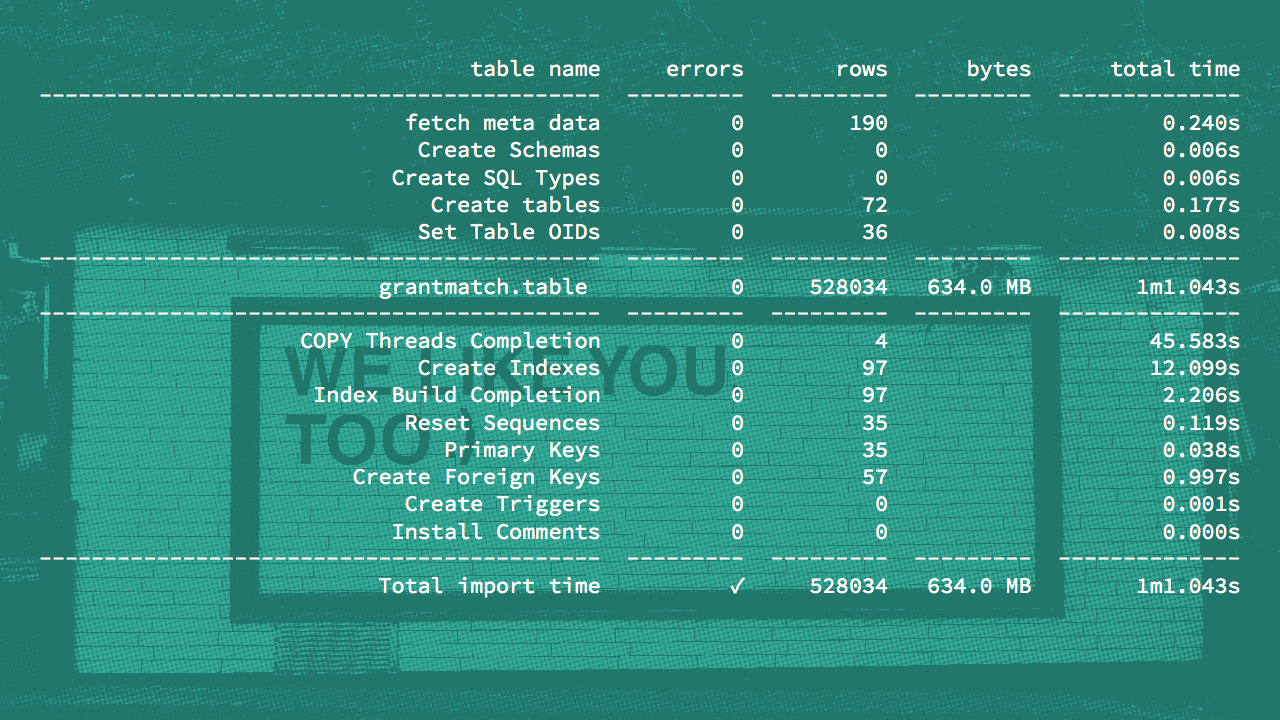

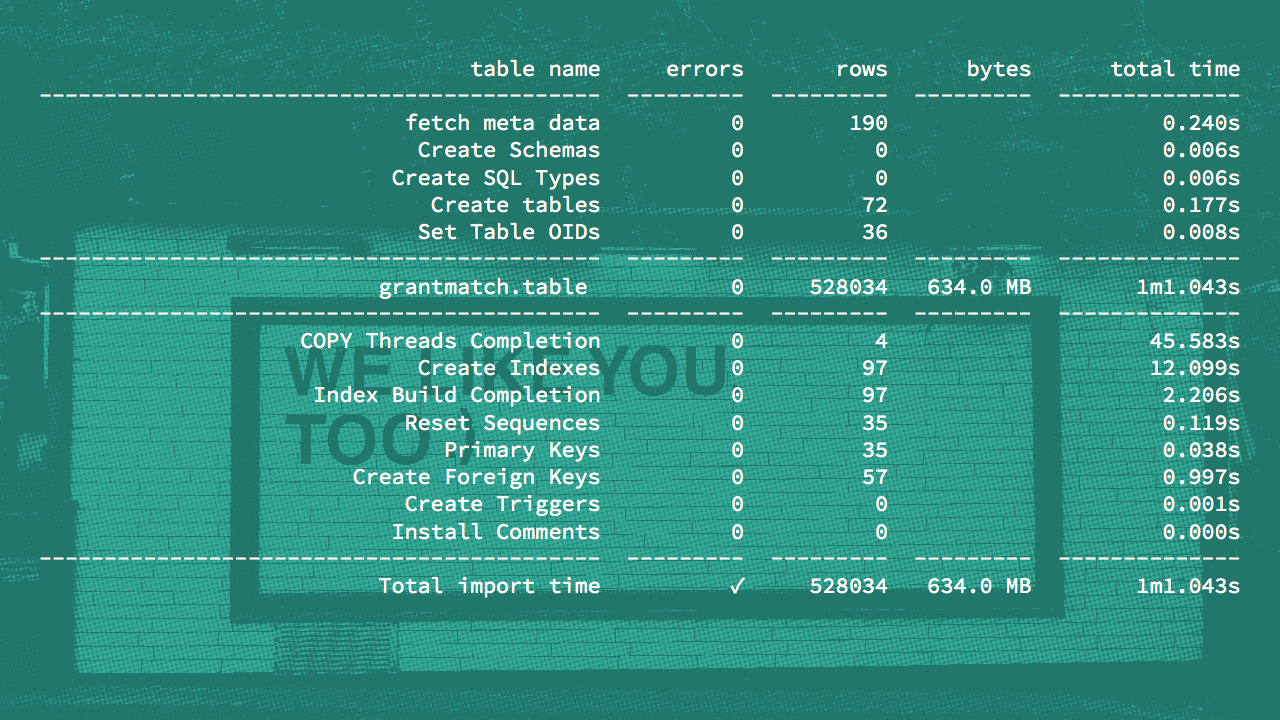

It sends you back a report of how the data was migrated, and hopefully there were no errors. In my case there weren't.

And because we had built the software on an ORM, it took very little time to migrate the application itself.

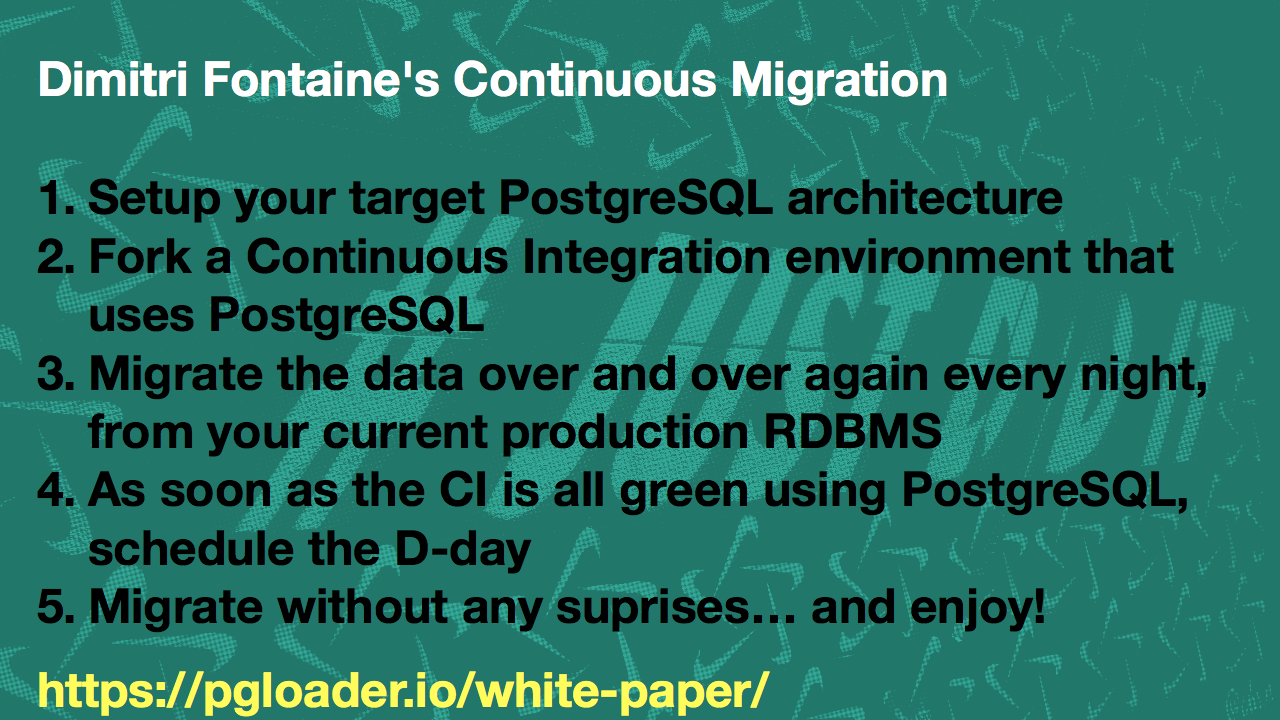

I followed the guide to Continuous Migration written by Dimitri, the author of pgloader.

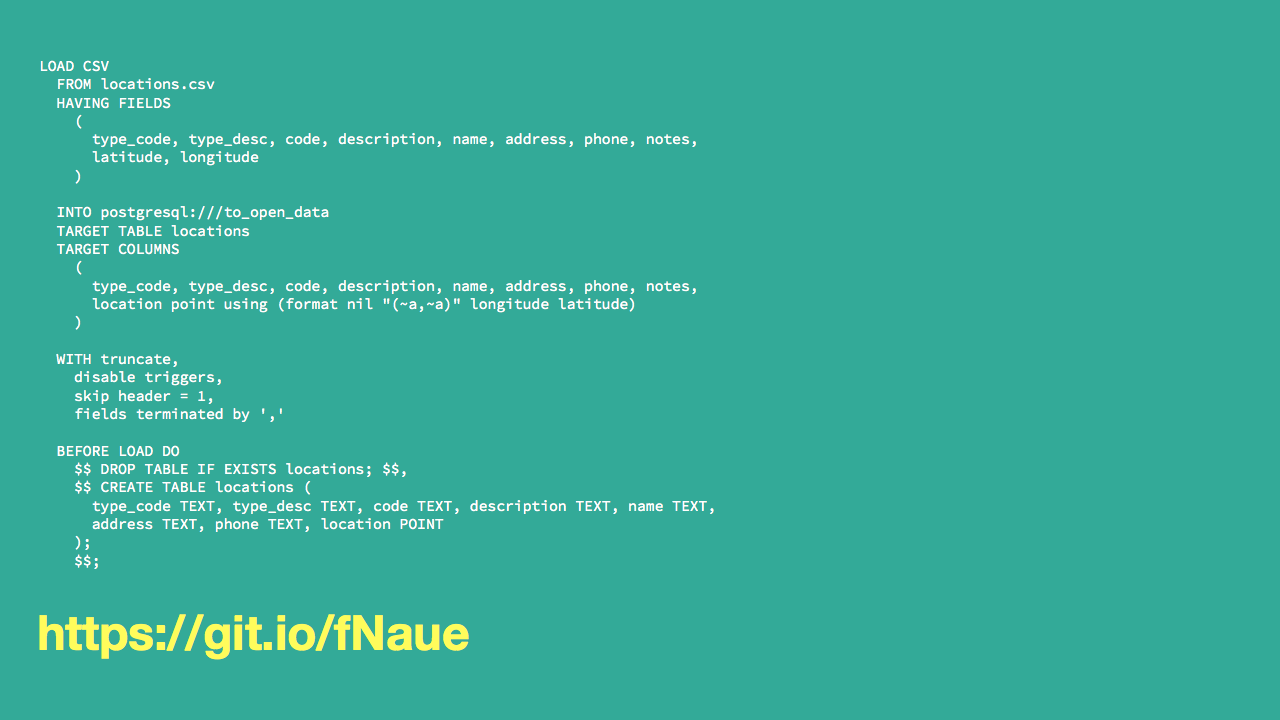

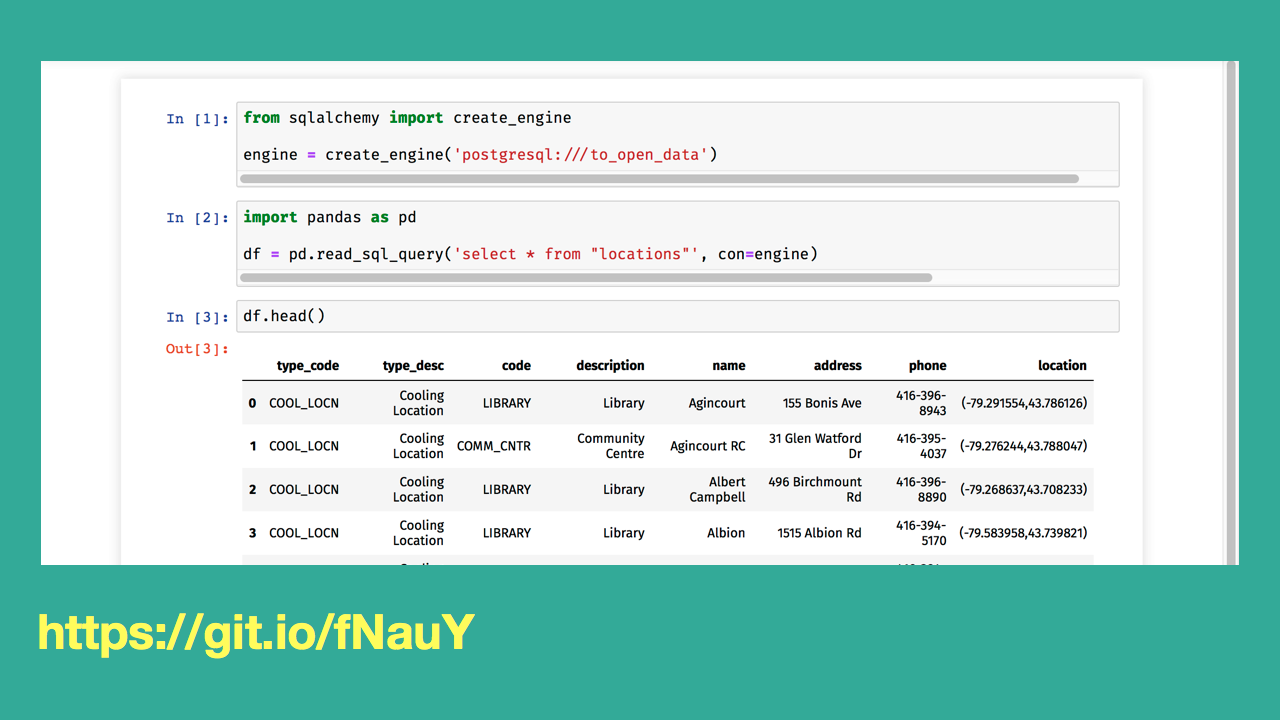

There is another way I use pgloader.

In data science there is one format: CSV, and maybe some Excel files. CSV is great, but when the files get large there are sometimes worries about corruption.
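One cheap guard against that worry is to check that every record has the same number of fields as the header before handing the file to a loader. The sketch below is my own hypothetical pre-check using Python's standard `csv` module; it is not a pgloader feature, and the function name `find_ragged_rows` is made up for illustration.

```python
import csv
from typing import Iterable, List, Tuple


def find_ragged_rows(lines: Iterable[str], delimiter: str = ",") -> List[Tuple[int, int]]:
    """Return (record_number, field_count) for every record whose width
    differs from the header row.

    Record 1 is the header. Records are counted by the csv parser, so a
    quoted field containing a newline still counts as a single record.
    Blank lines parse as zero-field records and are reported too.
    """
    reader = csv.reader(lines, delimiter=delimiter)
    header = next(reader, None)
    if header is None:
        return []  # empty input: nothing to check
    expected = len(header)
    problems = []
    for record_number, row in enumerate(reader, start=2):
        if len(row) != expected:
            problems.append((record_number, len(row)))
    return problems
```

For a real file, open it with `newline=""` as the `csv` module recommends, e.g. `with open("data.csv", newline="") as f: bad = find_ragged_rows(f)`, and only load it if `bad` is empty.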